In addition to the rack architecture contributions, Nvidia is broadening its Spectrum-X networking support for OCP standards.

In the competitive world of enterprise IT, it may come as a surprise that some vendors freely share their designs. But that’s exactly what the members of the Open Compute Project (OCP) Foundation are doing: 50-plus voting members and more than 300 community members and startups, including the major hyperscalers and chipmakers.

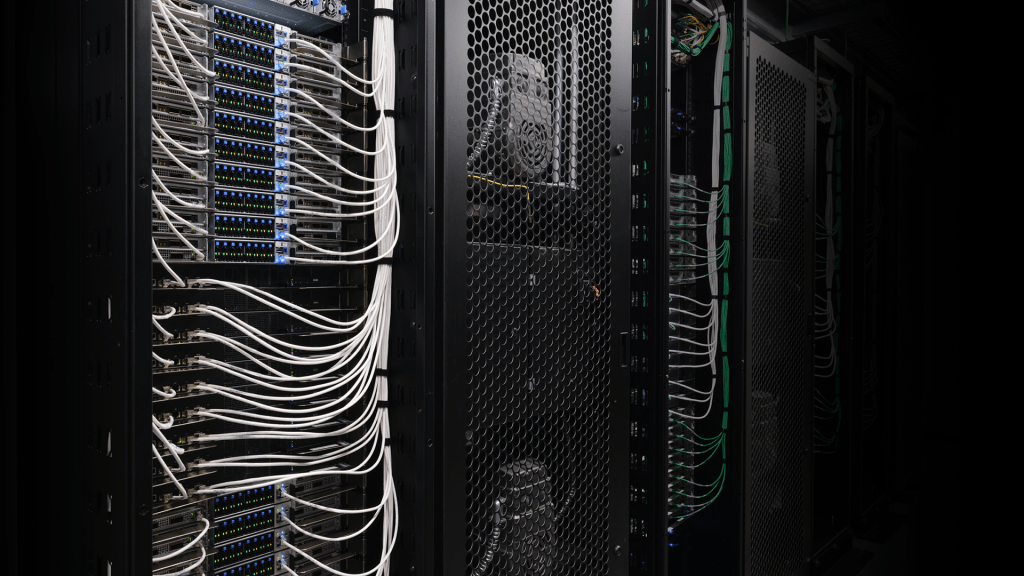

Today at the 2024 OCP Global Summit, Nvidia announced it has contributed its Blackwell GB200 NVL72 electro-mechanical designs – including the rack architecture, compute and switch tray mechanicals, liquid cooling and thermal environment specifications, and Nvidia NVLink cable cartridge volumetrics – to the project.

The NVL72 is a liquid-cooled, rack-scale design that connects 36 Nvidia Grace CPUs and 72 Blackwell GPUs, interconnecting the GPUs via NVSwitch and NVLink to allow them to act as a single massive GPU and deliver faster large language model (LLM) inference.

“One of the key elements of being able to use NVSwitch is we had to keep all of the servers and the compute GPUs very close together so that we could mount them into a single rack,” Shar Narasimhan, director of product marketing, AI and data center GPUs, at Nvidia, explained. “That allowed us to use copper cabling for NVLink and that, in turn, allows us to not only lower the cost, it also lets us use far less power than fiber optics.”

However, to do so, the rack had to be heavily reinforced to handle the extra weight, and the NVLink spine running vertically down the rack had to hold up to 5,000 copper cables. Nvidia also designed quick-disconnect and quick-release fittings for the plumbing and cables. Power capacity was upgraded to as much as 120 kW and 1,400 amps, which Narasimhan said is more than double that of current rack designs.

Nvidia also announced that its Spectrum-X Ethernet networking platform and the new ConnectX-8 SuperNIC will support OCP’s Switch Abstraction Interface (SAI) and Software for Open Networking in the Cloud (SONiC) standards. The ConnectX-8 SuperNICs for OCP 3.0 will be available next year.

Lastly, Nvidia highlighted partners that have built on its contributions and in turn are contributing those efforts to the OCP, including Meta’s Catalina AI rack.

“When one participant takes another participant’s open design, makes modifications, and then submits that back to the entire ecosystem, we can all benefit and thrive,” Narasimhan said.

Vertiv will be releasing an energy-efficient reference architecture based on the GB200 NVL72 for AI factories at scale, Narasimhan added.

“By contributing this as a reference architecture, a modular design for an AI factory, Vertiv has helped remove design risk for every other participant,” Narasimhan noted. “They’ve now reduced the design and construction lead time by 50%. In addition to that, they have invested time and energy to optimize the layout for improving cooling usage, minimizing power usage and maximizing the usage of space. By following this particular design, Vertiv has enabled cooling to be made 20% more efficient, and they’ve reduced space usage by 40%.”

“We thank Meta and Vertiv for their own contributions and participation in the open source community, and we welcome continued involvement and participation by all of the members in the computing community.”